During his election campaign, Barack Obama raised $690 million through online fundraising. The majority of this money came from email marketing.

What was his secret? Obama’s team obsessed over email A/B testing.

In one A/B test, Obama’s team found that one subject line generated $403,600 in donations, whereas another variation generated $2,540,866.

That’s a $2.1 million difference.

Seems like David Ogilvy was right when he said “When you’ve written your headline, you’ve spent eighty cents out of your dollar”.

Hopefully, those numbers speak for themselves as to why email A/B testing is worth talking about.

In this post I want to deconstruct what’s testable in email marketing and go through some powerful A/B tests that you can run to increase your open rates, click-through rates, and of course, conversion rates.

The 4 ½ Testable Components of Email Marketing

There are four (and a half) broad components to email marketing that are testable.

1. Your subject line

2. You (the sender)

3. Your content

4. Delivery time

The half point? Audience context.

While a bit trickier to split test, the context of why your subscribers are on your mailing list, and the relationship they have with you, plays a huge role in their likelihood of opening, clicking, and converting.

Through careful segmentation, we can experiment with this to understand how building trust and offering value ahead of time impacts the success of future emails.

But before I get ahead of myself, we first need to ensure that our email marketing software enables thorough A/B testing.

Getting the Right Email Software for A/B Testing

Surprisingly, not all email marketing software makes A/B testing easy.

If you ask me, this is nuts. It’s one of the easiest ways for email software providers to improve the performance of their users’ campaigns, which makes those users more likely to stay on as paying customers.

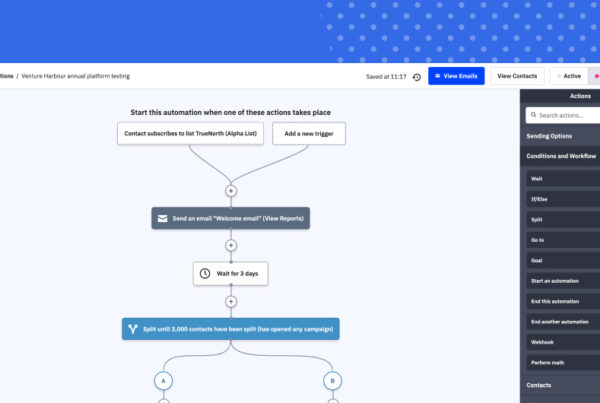

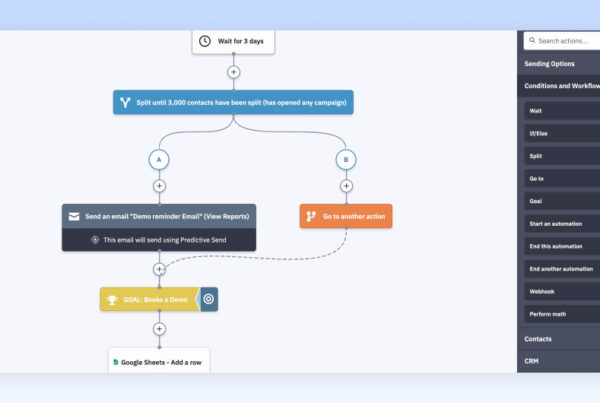

Thankfully though, a few do make it easy. There’s a detailed comparison of email software providers here, but the quick summary is that if you want A/B testing, I’d recommend GetResponse or ActiveCampaign.

I won’t go into the pros and cons here, but having used a myriad of tools (Mailchimp, Aweber, Sendy etc.), these are the only two that really let you go to town with A/B testing.

Once you’ve got a good email marketing tool that enables A/B testing, you can begin experimenting to find what does and doesn’t work.

So, let’s start off with one of the most important components: the subject line.

A/B Testing Your Subject Line

Last week, I ran an interesting subject line A/B test on a campaign that I sent out to subscribers of one of our music industry projects.

I created three subtly different variations. Despite the subtle differences, the winning variant outperformed the losing variant by 100%. It’s pretty amazing to see, empirically, how small differences can have such a huge impact.
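Before celebrating a result like this, it’s worth checking that the gap isn’t just noise. Here’s a minimal sketch of a two-proportion z-test in Python, using only the standard library. The function name and the counts are made up for illustration (they are not the figures from my campaign), and your email tool may well report something similar for you.

```python
import math

def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Return (z, two-sided p-value) for the difference in open rates."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate under the assumption that both variants perform equally.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: variant B doubles variant A's open rate.
z, p = two_proportion_z_test(opens_a=50, sent_a=500, opens_b=100, sent_b=500)
print(f"z = {z:.2f}, p = {p:.6f}")
```

A small p-value (below 0.05 is the usual convention) suggests the difference is unlikely to be chance alone. The sketch assumes at least one open and at least one non-open overall, otherwise the standard error is zero.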

A great subject line does three things, and three things only:

- It clearly describes the contents of the email

- It stands out in the inbox

- It compels the recipient to open the email

At a strategic level, that’s all there is to writing amazing subject lines. On a tactical level, each of these points could be a monster of a blog post in itself.

Here are just a few tactics that you can experiment with.

1. Personalisation – Anything that makes your email more relevant to the recipient will, generally, improve engagement. Using the recipient’s name or location in the subject line is an easy way to improve its relevancy.

2. Behaviour & contextual information – If you use an email marketing tool like Keap that allows you to gather behavioural data, you can make your subject lines hyper-relevant. For example, if a potential customer abandons the shopping cart, you could set up an email to be sent twenty minutes later with the subject “[Name], we noticed you left our site 20 minutes ago”.

3. Using symbols – 99% of subject lines are a combination of the same 26 letters and ten digits. Using non-standard characters, like ☞ or ☻, in the subject line is an easy way to catch the recipient’s attention.

4. Urgency – Behavioural economists have repeatedly shown that the fear of loss is a stronger motivator than the desire to gain. When you include phrases like ‘don’t miss out’ or ‘[24 hours only]’ in your subject lines, you’re tapping into the innate need to avoid loss.

5. Cliffhangers – Just like in all those dodgy TV soaps, when you build anticipation, it makes people want to know what happens next, or what’s inside.

6. Ask a question – Questions prime our brain for curiosity. Studies have found that even seeing a question mark stimulates our brain to come up with an answer.

7. Have a call to action – You may want to experiment with adding ‘click to find out’ or a similar call to action to see whether that prompts recipients to open your email.

8. Humour – Email subject lines tend to be quite dull. One way to stand out is to have a humorous subject line.

9. Offer incentives – What can you offer to sweeten the deal for the recipient? Why should they open your email?

10. Imply value – If you can imply that the recipient will benefit from opening your email, they’re more likely to open it.

We’re really only scratching the surface here. Just remember: anything that makes your emails stand out and compels the recipient to open them, while still clearly describing the contents, is worth testing.
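To make the personalisation tactic concrete, here’s a rough Python sketch of how a set of subject line variants might be filled in per subscriber. The `build_subject` helper, the `first_name` field, and the example subject lines are all hypothetical; most email tools offer their own merge tags for this.

```python
def build_subject(template, subscriber, fallback):
    """Fill a subject-line template, or use the fallback if no name is stored."""
    first_name = (subscriber.get("first_name") or "").strip()
    if not first_name:
        # Avoid sending ", our new release is out" to people with no name on file.
        return fallback
    return template.format(first_name=first_name)

# Hypothetical variants for one campaign: plain, personalised, and a question.
FALLBACK = "Our new release is out"
VARIANTS = {
    "plain": "Our new release is out",
    "personalised": "{first_name}, our new release is out",
    "question": "{first_name}, have you heard our new release?",
}

subscriber = {"email": "sam@example.com", "first_name": "Sam"}
for name, template in VARIANTS.items():
    print(name, "->", build_subject(template, subscriber, FALLBACK))
```

The fallback matters more than it looks: a broken merge tag in the subject line stands out in the inbox for all the wrong reasons.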

A/B Testing You

Don’t worry. When I talk about A/B testing you, I’m not suggesting we make clones or change your name…

That might be a little excessive for optimising open rates.

What I mean is playing around with the ‘from’ field. Mailchimp has suggested that, in some cases, the from field can be just as important as your subject line.

Remember the Barack Obama case study I mentioned earlier? Here’s one of my favourite email campaigns that his team sent out.

Toby Fallsgraff, the head honcho behind Obama’s email campaigns, said in an interview:

“The subject lines that worked best were things you might see in your inbox from other people. ‘Hey’ was probably the best one we had over the duration.”

What makes the email above so awesome is not just the subject line, but the combination of subject line and from field. If the from-field was ‘Team Obama’ or ‘Organizing for Action’, I doubt it’d be nearly as effective.

So, what can you test in the from field? Here are a few ideas:

- If you’re a company, experiment with your company name vs. name of your marketing person vs. name of your CEO / founder.

- Your full name vs. first name – Seth Godin likely gets a higher open rate using ‘Seth Godin’ as opposed to just ‘Seth’. That said, I’ve also found that the open rate is sometimes higher when using the first name only, as recipients mistake your email for one from someone else they know with the same name.

- Different email addresses – if you’re sending emails from noreply@yourcompany.com you may want to test this against name@yourcompany.com.

- Male vs. female – Depending on your audience, you may want to experiment with having a male vs. female sender.

- Name connotations – We associate certain connotations with names. As such, we’re likely to perceive an email about modern fashion from ‘Betty’ differently to one from ‘Jessica’. Depending on your audience, this may be worth experimenting with.
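Whichever of these you test, each subscriber should see the same sender for the whole experiment. If your email tool doesn’t handle the split for you, one way to do it is to hash the subscriber’s email address into a bucket. This is only a sketch: `assign_variant`, the test name, and the sender names are invented for illustration.

```python
import hashlib

def assign_variant(email, variants, test_name):
    """Deterministically map a subscriber to one variant of a named test."""
    # Including the test name means a new test reshuffles everyone.
    key = f"{test_name}:{email.strip().lower()}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]

# Hypothetical 'from' names: company vs. marketing person vs. founder.
FROM_VARIANTS = ["Acme Ltd", "Jane at Acme", "Jane Doe, CEO"]

for email in ["sam@example.com", "alex@example.com", "kim@example.com"]:
    print(email, "->", assign_variant(email, FROM_VARIANTS, "from-field-test"))
```

Because the assignment depends only on the email address and the test name, re-running the script never moves anyone between groups.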

A/B Testing Your Content

I’m extremely skeptical about studies suggesting that visual content outperforms plain text or vice versa.

So much depends on the creator’s ability to write compelling copy, or design persuasive visuals. As such, you can take any statistics you hear about one bettering the other with a cupboard full of salt.

There are two contradicting schools of thought at play here.

On the one hand, it’s hard to argue that a picture doesn’t convey a thousand words. Visual content tends to be better at influencing our emotions and conveying messages more efficiently. +1 for visual content.

At the same time, emails from our friends are written in plain text, so we tend to associate visual emails with promotions. Plain text is a more personal format for email marketing. +1 for plain text.

Here’s my perspective:

Step 1. Learn to write awesome copy.

Step 2. Learn to design awesome visuals (or hire someone who can).

Step 3. Experiment with visual content vs. plain text.

What I’m getting at is that if plain text works best for you, it’s probably because you’re an awesome writer, not because visual content sucks. If visual content works best, you can high five your designer (self high-five if it’s you).

Understand your strengths, and play to them.

A/B Testing Delivery Times

There are countless studies on the best times to send your emails. I’m going to reference precisely zero of them. Here’s why.

They’re based on averages across multiple niches, and as the adage goes, “averages lie”.

Combine this with the fact that when everyone adopts a best practice, it no longer remains a best practice, and these studies become almost entirely redundant for practical use.

So, how do you know when to send your campaigns? By running your own experiments.

One of the easiest ways to do this is with auto-responders.

If you set up auto-responders that get sent out X days after someone joins your list, your emails will eventually be sent out at different times on every day of the week.

You’ll soon reach a point of statistical significance, where you can see which times and days produce the highest engagement rates.
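If you can export a log of when each auto-responder email was sent and whether it was opened, the comparison is easy to run yourself. Here’s a minimal Python sketch; the log format (a send timestamp plus an opened flag) and the function name are assumptions, so adapt them to whatever your tool exports.

```python
from collections import defaultdict
from datetime import datetime

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]

def open_rate_by_slot(send_log):
    """Group sends by (weekday, hour) and return the open rate per slot."""
    totals = defaultdict(lambda: [0, 0])  # slot -> [opens, sends]
    for sent_at, opened in send_log:
        slot = (DAYS[sent_at.weekday()], sent_at.hour)
        totals[slot][0] += int(opened)
        totals[slot][1] += 1
    return {slot: opens / sends for slot, (opens, sends) in totals.items()}

# Hypothetical log entries: (time sent, was it opened?)
send_log = [
    (datetime(2024, 1, 1, 9, 0), True),
    (datetime(2024, 1, 1, 9, 30), False),
    (datetime(2024, 1, 2, 14, 0), True),
]
for slot, rate in sorted(open_rate_by_slot(send_log).items()):
    print(slot, f"{rate:.0%}")
```

Slots with only a handful of sends will swing wildly, so hold off on drawing conclusions until each slot has a decent number of emails behind it.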

If you’re just sending a normal email newsletter, you should be able to A/B test different delivery times on a small portion of your list.
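If your tool doesn’t do this split for you, the idea can be sketched in a few lines of Python: shuffle the list, carve off a test portion, and deal it evenly across the send times you want to compare. The function name, the 25% test fraction, and the send times below are illustrative assumptions only.

```python
import random

def split_for_send_time_test(subscribers, test_fraction, send_times, seed=0):
    """Return ({send_time: [subscribers]}, remainder) for a delivery-time test."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(subscribers)
    rng.shuffle(shuffled)
    test_size = int(len(shuffled) * test_fraction)
    test_pool, remainder = shuffled[:test_size], shuffled[test_size:]
    # Deal the test pool round-robin across the send times.
    groups = {
        send_time: test_pool[i::len(send_times)]
        for i, send_time in enumerate(send_times)
    }
    return groups, remainder

subscribers = [f"user{i}@example.com" for i in range(100)]
groups, remainder = split_for_send_time_test(subscribers, 0.25, ["09:00", "18:00"])
for send_time, group in groups.items():
    print(send_time, len(group), "subscribers")
print("held back for the winning time:", len(remainder))
```

Once the test groups have had time to open their emails, the rest of the list gets the newsletter at whichever time won.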

All in all, this is probably the easiest thing to split test.

A/B Testing Your Audience

The best online marketers don’t start A/B testing with A/B testing. They start by understanding their audience.

Here’s why.

You can have the greatest subject line and email content in the history of email marketing, but if you send it to a poorly qualified audience, it’ll produce poor results.

That’s why I think it’s important to talk about testing our audience, and seeing how different on-boarding methods influence the effectiveness of email marketing.

Identifying how trust influences email marketing effectiveness

As illustrated below, split-testing your on-boarding sequence is a great way to understand the impact of building trust with your mailing list subscribers.

It’s considered best practice not to sell anything to your subscribers when they first sign up. Here’s how you’d split-test that theory to know whether it holds true for you or not.

Let’s say that, from doing this, you find out that you have the highest conversion rate when sending one ‘trust building’ email to your subscribers before trying to sell something.

Now, you might want to identify whether the way in which your subscribers sign up to your list impacts that conversion rate.

Imagine that Audience A are people who signed up to your list from a blog post, Audience B are people who downloaded a free e-book, and Audience C are people who signed up from your contact form.

With this data in hand, you know that people who sign up for a free e-book are most likely to convert into paying customers. You may then decide to increase the prominence of your free e-books to drive more high-quality subscribers.
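Here’s a small Python sketch of that comparison, assuming you can export each subscriber with the way they signed up and whether they went on to buy. The field names (`source`, `converted`) and the sample records are hypothetical, and the data set is far too small to mean anything; it’s only there to show the shape of the calculation.

```python
from collections import Counter

def conversion_rate_by(subscribers, key):
    """Return {group: conversion rate}, grouping subscribers by `key`."""
    totals = Counter()
    converted = Counter()
    for sub in subscribers:
        group = sub[key]
        totals[group] += 1
        converted[group] += int(sub["converted"])
    return {group: converted[group] / totals[group] for group in totals}

# Hypothetical records; "source" and "converted" are assumed field names.
subscribers = [
    {"source": "blog post", "converted": False},
    {"source": "blog post", "converted": True},
    {"source": "free e-book", "converted": True},
    {"source": "free e-book", "converted": True},
    {"source": "contact form", "converted": False},
    {"source": "contact form", "converted": False},
]
rates = conversion_rate_by(subscribers, "source")
best_source = max(rates, key=rates.get)
print("best-converting source:", best_source)
```

The same function works for the trust-building test: store which email sequence each subscriber received under another key and group by that instead.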

In an ideal world, you could take this concept to the extremes to find the best-performing email sequences for various demographic, behavioural, and contextual ‘buckets’ of subscribers. In reality, that’s probably overkill for everyone except the likes of Amazon and eBay.

In Summary

One of the founding principles of the field of science is to question everything.

When it comes to scientifically optimising your email campaigns, don’t rely on other studies. In fact, don’t even rely on anything mentioned in this post.

Set up your own experiments and find out what works for you.

Image Credits: Joe Crimmings, Justin Sloan